
Microsoft teams up with StopNCII to combat fake nude images

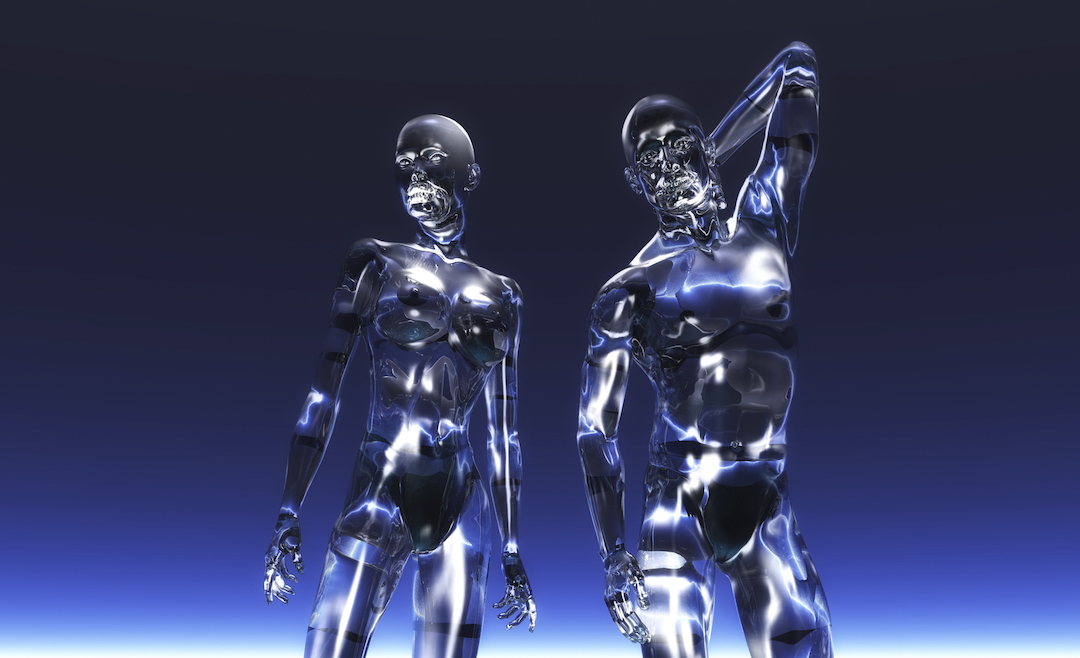

Synthetic nude images are increasingly produced with generative AI tools, and Microsoft has announced a new step to keep them out of its search engine.

On Thursday, the company announced a partnership with StopNCII, an organization that helps victims of revenge porn. The goal is to keep such explicit images out of Bing search results.

StopNCII lets victims create a digital fingerprint, or "hash," of explicit images, whether real or AI-generated, directly on their own devices, so the images themselves are never uploaded.

Partner platforms then use the hash to detect and remove matching copies. Besides Bing, Facebook, Instagram, Threads, TikTok, Snapchat, Reddit, Pornhub, and OnlyFans have integrated StopNCII's technology to curb the spread of revenge porn.
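To illustrate how a fingerprint can catch copies without the image itself ever being shared, here is a minimal Python sketch. It uses a simple "average hash" as a stand-in: the article does not describe StopNCII's actual hashing algorithm, and the function names, the 8x8 grid, and the 5-bit match threshold are illustrative assumptions, not StopNCII's or Bing's API.

```python
from typing import Iterable, List

# Hypothetical representation: a grayscale image as rows of 0-255 ints.
Image = List[List[int]]


def average_hash(pixels: Image, size: int = 8) -> int:
    """Return a size*size-bit fingerprint of the image.

    The image is shrunk to a size x size grid by averaging blocks of pixels;
    each cell contributes one bit: 1 if it is brighter than the grid's mean.
    Assumes the image is at least `size` pixels in each dimension.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    for r in range(size):
        r0, r1 = r * height // size, (r + 1) * height // size
        for c in range(size):
            c0, c1 = c * width // size, (c + 1) * width // size
            block = [pixels[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    fingerprint = 0
    for value in cells:
        fingerprint = (fingerprint << 1) | (1 if value > mean else 0)
    return fingerprint


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def matches(candidate: int, blocklist: Iterable[int], max_distance: int = 5) -> bool:
    """Platform side: flag an image whose fingerprint is close to any
    submitted fingerprint. The 5-bit threshold is an arbitrary choice."""
    return any(hamming_distance(candidate, h) <= max_distance for h in blocklist)


if __name__ == "__main__":
    # Victim's device: only the fingerprint is submitted, never the pixels.
    original = [[x * 16 for x in range(16)] for _ in range(16)]
    submitted = {average_hash(original)}

    # Platform: a re-uploaded copy that was slightly brightened still matches,
    brighter = [[v + 3 for v in row] for row in original]
    # while a different image (the gradient mirrored left to right) does not.
    mirrored = [row[::-1] for row in original]

    print(matches(average_hash(brighter), submitted))  # True
    print(matches(average_hash(mirrored), submitted))  # False
```

Unlike a cryptographic hash, which changes completely when a single pixel changes, a perceptual hash like this one is designed so that visually similar images produce similar fingerprints. That is why matching compares a distance against a threshold rather than testing for exact equality.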

The move follows a pilot program in which Microsoft removed 268,000 explicit images from Bing's image search by the end of August. Until now, Microsoft relied on a direct reporting tool, which the company says is not enough on its own to manage the problem.

“We have heard concerns from victims, experts, and other stakeholders that user reporting alone may not scale effectively for impact or adequately address the risk that imagery can be accessed via search,” Microsoft stated in a blog post.

While Microsoft and other platforms are taking proactive measures, Google has been criticized for not partnering with StopNCII, although Google Search offers its own tools for reporting and removing explicit content.

According to a Wired investigation, Google users in South Korea have reported 170,000 links to unwanted sexual content since 2020.

AI-generated nude images are already a widespread problem, and it reaches high school students, even though StopNCII's tools are available only to people over 18. In the U.S., there is no federal law on AI deepfake porn, so the issue is handled through a patchwork of state and local regulations.