GPT-3: Here’s what you should know.

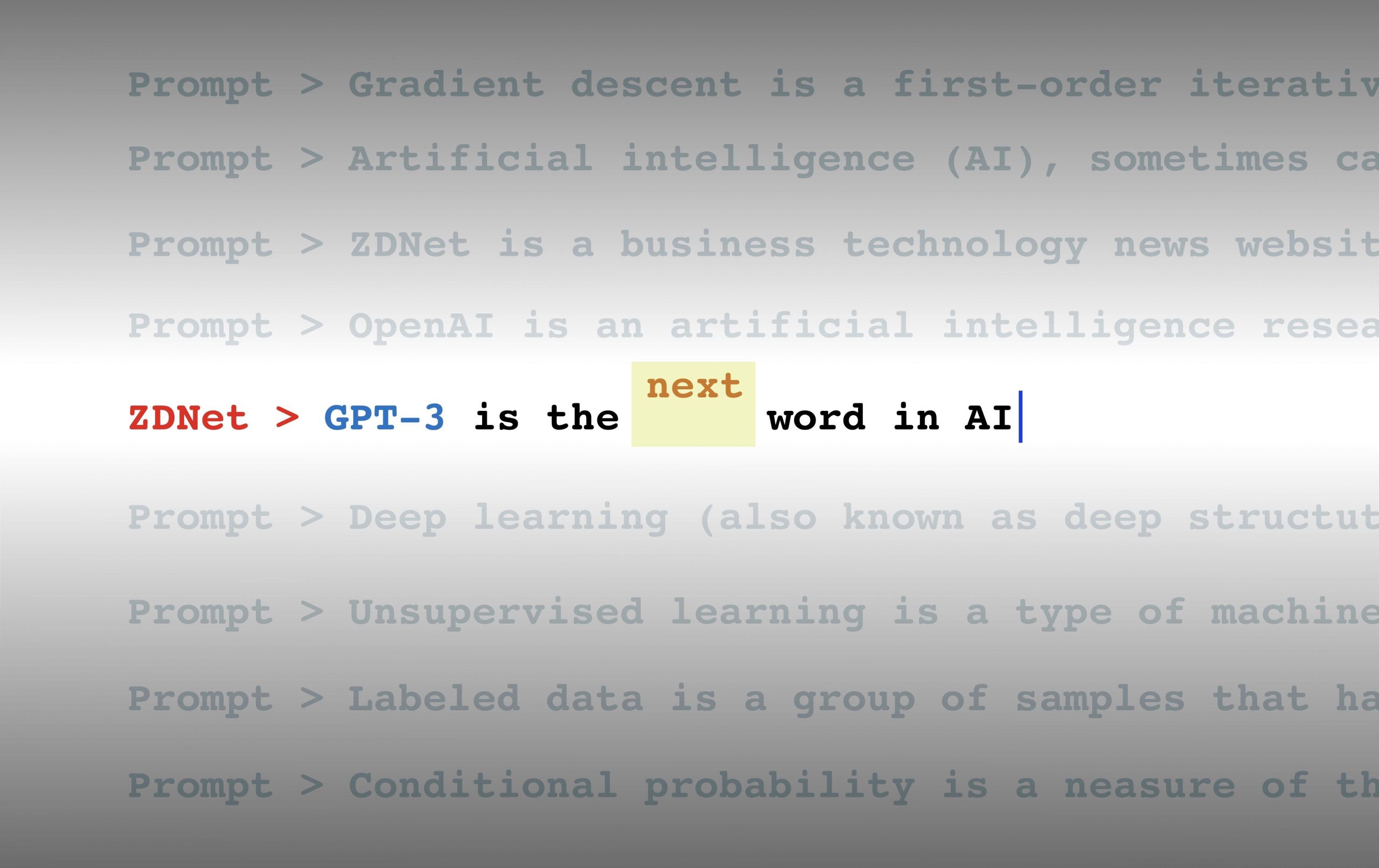

GPT-3 (Generative Pre-trained Transformer 3) is an autoregressive language model that uses deep learning to produce human-like text. It is the third model in OpenAI's GPT-n series of natural language processing models. OpenAI is a San Francisco-based artificial intelligence research laboratory whose co-founders include Elon Musk.

According to the organization's website, those "interested in exploring the API" are invited to "join companies like Algolia, Quizlet, and Reddit, and researchers at institutions like the Middlebury Institute" in the private beta.

The model has 175 billion machine learning parameters. OpenAI engineers introduced GPT-3 in May 2020 through an arXiv preprint, and by July 2020 it was in beta testing. Before GPT-3's release, the largest language model was Microsoft's Turing-NLG, introduced in February 2020 with 17 billion parameters, less than a tenth of GPT-3's count.

Enter Wu Dao. The Beijing Academy of Artificial Intelligence (BAAI) recently released details of its multimodal AI system, Wu Dao 2.0. It can generate text, audio, and images, and it can power virtual idols. With 1.75 trillion parameters, it is ten times the size of GPT-3.

GPT-3 has been criticized by figures such as Noam Chomsky and Gary Marcus, and OpenAI has faced a number of controversies surrounding its GPT-3 project.

OpenAI restructured in 2019, creating a for-profit ("capped-profit") company. In 2020, it entered a licensing agreement with Microsoft. OpenAI still offers a public-facing API that lets users send text to GPT-3 and receive the model's output, but only Microsoft has access to GPT-3's source code.

GPT-3 has been used by organizations such as Microsoft and The Guardian.